Evolving Dark’s tracing system

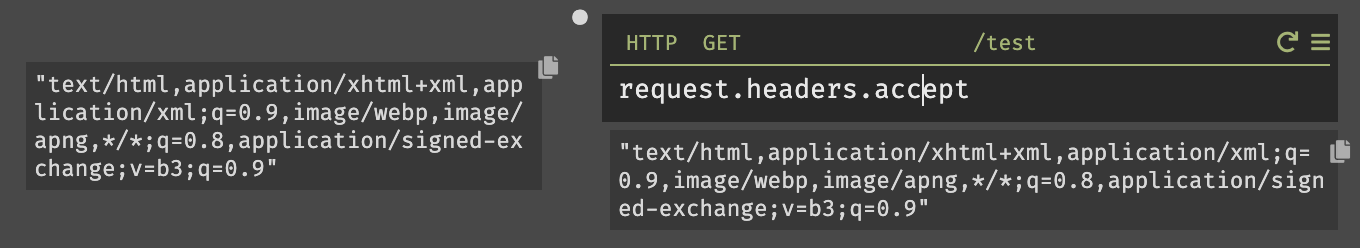

One of the things that makes Dark truly unique is what we call "Trace-driven development". The best way to write an HTTP handler in Dark is to start by making a request to the non-existent handler, then:

- use the 404s list to create the handler

- use the actual trace value to see the output of your code as you type it.

We use the trace system a lot, and it's pretty great. It acts as a sort of omniscient debugger: you don't need to start it, you can go back in time easily, you don't need print statements. You can even see the control-flow of your application.

Like most things in Dark today, the trace system was built using the simplest, most obvious implementation possible. As we've grown quite considerably since then, we need to ensure that traces continue to scale well, which they currently do not.

This post covers what we've discovered isn't working, along with some ramblings about what the next generation should look like.

Cleanup

Dark stores basically every request that is made to it, and it stores them in the database. While this data is important, it isn't the same level of importance as user data. Storing useful and voluminous data in the same DB as a much lower volume of extremely precious data is not a great idea.

To avoid the DB blowing up in size (and price) we go through the DB and garbage collect it pretty much continuously. We keep the last 10 requests, and also keep any requests that were made in the last week.
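As a rough illustration, the retention rule above can be written as a small in-memory function. This is a sketch, not our actual garbage collector (which is mostly SQL): the `StoredRequest` type, the `handler_key` field, and the choice to apply the "last 10" rule per handler are all assumptions made for the example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class StoredRequest:
    trace_id: str
    handler_key: str       # hypothetical grouping key; the real schema may differ
    received_at: datetime


def requests_to_delete(requests, now, keep_latest=10, keep_for=timedelta(days=7)):
    """Return the requests that are neither among the newest `keep_latest`
    in their group nor received within the `keep_for` window."""
    groups = {}
    for request in requests:
        groups.setdefault(request.handler_key, []).append(request)

    cutoff = now - keep_for
    doomed = []
    for group in groups.values():
        # Newest first, so everything past index keep_latest is a candidate.
        group.sort(key=lambda r: r.received_at, reverse=True)
        for request in group[keep_latest:]:
            if request.received_at < cutoff:
                doomed.append(request)
    return doomed
```

Even in this simplified form a request has to fail both tests before it can be deleted, which is part of why the SQL version is easy to get wrong.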

We have struggled to make this not be incredibly buggy. The logic is tricky, and mostly written in SQL whose performance is iffy and which hides quite a few footguns. As a result, the requests to delete data are slow (interestingly, this garbage collector accounts for the majority of the load on our database) and also lock quite a bit (though I'm systematically working through this in a recent PR).

It can also be hard to identify what data to delete. When we started, we didn't know how we wanted traces to work, and so went with an implementation that stored a trace using the path of the URL requested. This worked well initially, especially as it allowed for easily transitioning a 404 (essentially, a trace with no owner) to a new handler, but had weird behaviour when you changed a handler's route (losing all its traces!). Alas, URLs also support wildcards, and so this meant that in order to find out whether a trace should be deleted, we basically had to recreate the entire routing business logic in the DB.

My thinking here is to associate the trace with the actual handler it hits. That way we're not recreating the business logic, but we'd need a separate 404 storage (although this is probably simpler in the long run). It also changes the behaviour when you "rename" a handler, which you sometimes do early in development; the new behaviour would be to keep the existing traces, which honestly is a much more user-friendly behaviour.
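A minimal sketch of that idea, assuming traces are keyed by a stable handler ID and 404s live in their own list. The names here (`Handler`, `TraceStore`, `claim_404s`) are hypothetical, and the `matches` predicate stands in for the real router so that no routing logic has to be duplicated in the storage layer.

```python
from dataclasses import dataclass, field


@dataclass
class Handler:
    handler_id: int   # stable identity, unaffected by route changes
    route: str


@dataclass
class TraceStore:
    by_handler: dict = field(default_factory=dict)  # handler_id -> [trace_id]
    unmatched: list = field(default_factory=list)   # 404s as (path, trace_id)

    def record(self, handler, path, trace_id):
        """Store a trace under the handler it hit, or as a 404 if none matched."""
        if handler is None:
            self.unmatched.append((path, trace_id))
        else:
            self.by_handler.setdefault(handler.handler_id, []).append(trace_id)

    def claim_404s(self, handler, matches):
        """Move 404 traces to a newly created handler. `matches(path)` is
        supplied by the router, so routing rules are not re-implemented here."""
        still_unmatched = []
        for path, trace_id in self.unmatched:
            if matches(path):
                self.by_handler.setdefault(handler.handler_id, []).append(trace_id)
            else:
                still_unmatched.append((path, trace_id))
        self.unmatched = still_unmatched

    def traces_for(self, handler):
        return list(self.by_handler.get(handler.handler_id, []))
```

Because the lookup goes through `handler_id`, changing `handler.route` leaves `traces_for(handler)` untouched, which is the "rename keeps its traces" behaviour described above.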

Storage

One of the problems is that we're storing the data in a DB. This sort of log data, which is mostly immutable, should be stored somewhere more appropriate, like S3 (we use Google Cloud, so Cloud Storage in our case). This was also a pattern from the early days of CircleCI - we started by saving build logs in the DB, before moving them to S3.

That would also allow us to send traces to the client without going through the server, which has operational problems of its own. This solves a big problem for customers with larger traces, which can time out when loading from our server. Since Dark is basically unusable without traces (you can't use autocomplete well without them, for instance), solving this is pretty important.

The other upside of this is that rather than running a GC process to clear up the DB (which doesn't even do a great job, as the DB will continue to hold onto the space), using something like S3 would allow us to have lifecycle policies to automatically clean up this data.

One of the problems here is that traces aren't quite immutable. You can -- by design -- change the contents of a trace. While the initial input is immutable, you can re-run a handler using the same inputs, which currently overwrites the same trace (users have found this dumb, so losing this behaviour is probably an improvement).

You can also run a function you just wrote, adding it to the trace. This behaviour actually is good - it's a key part of Trace-driven development that you start with a partial trace based on your inputs, and then start to build it up as you write code.

My current thinking is to add the concept of a trace "patch". If you run something on top of the trace, we store the "patch" in the DB and resolve/combine the "base trace" and its patches in the client.
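To make the idea concrete, here is a sketch of how a client might combine the two. It assumes, purely for illustration, that a trace can be represented as a mapping from an expression ID to the value recorded there, and that patches are applied in the order they were created; neither detail is settled.

```python
def resolve_trace(base, patches):
    """Combine an immutable base trace with its patches, oldest patch first.

    `base` and each patch are dicts of expression ID -> recorded value
    (a hypothetical shape). Later patches win when they touch the same ID.
    """
    resolved = dict(base)  # copy, so the stored base trace is never mutated
    for patch in patches:
        resolved.update(patch)
    return resolved


# The base trace only covers what ran during the original request.
base = {"request": {"path": "/users/7"}, "expr-1": 7}
# Running a newly written function later adds its result as a patch.
patches = [{"expr-2": "Ada"}, {"expr-2": "Ada Lovelace", "expr-3": True}]
full_trace = resolve_trace(base, patches)
```

The base trace can then stay write-once in object storage, while only the small, mutable patches need to live in the DB.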

Expiration

The GC process isn't a great feature. While it would be much better if it didn't hit the DB at all, it would be even better if it didn't exist. Cloud Storage/S3 have expiration policies, which can automatically delete data without having to go through an expensive GC process.
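For a sense of scale, an age-based expiration policy is only a few lines of configuration. The sketch below builds a Cloud Storage lifecycle configuration with a single `Delete` rule; the seven-day age mirrors our current one-week retention, and the helper name `delete_after_days` is made up for the example.

```python
import json


def delete_after_days(days):
    """Build a Cloud Storage lifecycle configuration that deletes
    objects once they are more than `days` days old."""
    return {
        "rule": [
            {
                "action": {"type": "Delete"},
                "condition": {"age": days},  # age is measured in days
            }
        ]
    }


# Emit the JSON that would be applied to the (hypothetical) traces bucket.
print(json.dumps(delete_after_days(7), indent=2))
```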

One issue would be that we don't want the latest ten traces (or some number) to expire. I haven't fully thought this one through, but it seems doable.

You can sign up for Dark here, and check out our progress in these features in our contributor Slack or by watching our GitHub repo. Comment here or on Twitter.